China did not conspire with Democrats to steal the midterm elections, contrary to claims made by some YouTubers. Neither did Saudi Arabia.

There is also no evidence that “massive amounts of fraud” occurred in Pennsylvania in 2020, or that electronic voting machines will rig the results next week, as one conservative activist claimed in a video.

Disinformation watchdogs are concerned that YouTube’s aggressive effort to counter misinformation on the Google-owned platform has developed blind spots. They are especially concerned about YouTube’s TikTok-like service, which offers very short videos, and about Spanish-language videos on the platform.

But the situation is difficult to understand clearly, more than a dozen researchers said in interviews with The New York Times, because they have limited access to data and because screening videos is time-consuming.

“It’s easier to do research with other forms of content,” said Jiore Craig, head of digital integrity at the Institute for Strategic Dialogue, or ISD, a nonprofit that combats extremism and disinformation. That, she said, leaves YouTube in a position where it gets off more easily.

While Twitter and Facebook are closely watched for disinformation, YouTube has often escaped notice despite its vast influence. It reaches more than two billion people and is the second most used search engine on the internet.

YouTube banned videos alleging widespread fraud in the 2020 presidential election. However, it has not adopted a comparable policy for the midterm elections, a decision that has drawn criticism from some watchdogs.

“You can’t build a sprinkler system after the building is on fire,” said Angelo Carusone, president of Media Matters for America, a nonprofit that monitors conservative disinformation.

A YouTube spokeswoman, Ivy Choi, said that the company disagreed with some of the criticism of its work to combat disinformation. She said in a statement that the company has invested heavily in its policies and systems in order to combat election-related disinformation using a multilayered approach.

YouTube said it had removed a number of videos that The New York Times had flagged for violating its policies on election integrity and spam, and that it had determined other content did not violate its policies. The company also said that it removed 122,000 videos containing misinformation between April and June.

“Our community guidelines prohibit misleading voters about how to vote, encouraging interference in the democratic process and falsely claiming that the 2020 US election was rigged or stolen,” Ms Choi said. “These policies apply globally, regardless of language.”

YouTube hardened its stance on political misinformation after the 2020 presidential election. Some YouTubers livestreamed the January 6, 2021, attack on the Capitol. Within 24 hours, the company began penalizing those who spread the lie that the 2020 election was stolen and revoked President Donald J. Trump’s uploading privileges.

YouTube has committed $15 million to hire more than 100 additional content moderators to help with the midterm elections and the presidential election in Brazil. The company also has more than 10,000 moderators located around the globe, according to a person familiar with the matter who was not authorized to discuss hiring decisions.

According to a person familiar with the matter, the company has audited its recommendation algorithm to ensure it doesn’t suggest political videos from unverified sources. YouTube also set up an election war room staffed by dozens of officials and was preparing to quickly remove any videos or live broadcasts that violate its policies on Election Day, the person said.

Still, researchers argued that YouTube could be more proactive in countering false narratives that may continue to circulate after the election.

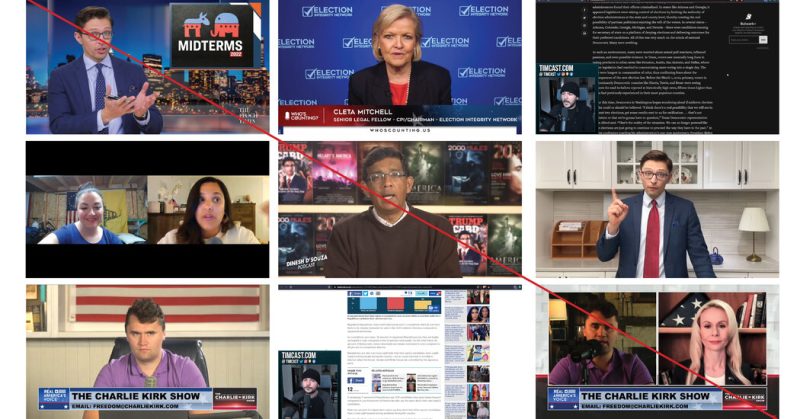

The most prominent election conspiracy theory on YouTube has been the unsubstantiated claim that some Americans cheated by stuffing ballot drop boxes with multiple ballots. The idea comes from a discredited, conspiracy-filled documentary called “2000 Mules,” which claimed that Mr Trump lost re-election because of illegal votes.

ISD reviewed YouTube Shorts and found at least a dozen videos that echoed the ballot-trafficking allegations of “2000 Mules,” according to links shared with The New York Times. Viewership of the videos varied widely, from a few dozen views to tens of thousands. Two videos included links to the film itself.

ISD found the videos through keyword searches. The list was not meant to be exhaustive, but “these short films were identified easily, which indicates that they are still readily accessible,” three ISD researchers wrote. Some videos show men speaking to the camera in a car or a house, affirming their belief in the film. Others promote the documentary without any personal commentary.

The nonprofit group also looked at TikTok, a competitor of YouTube Shorts, and found that both hosted similar types of misinformation.

Ms Craig of ISD said that nonprofit groups such as hers were working hard to catch misinformation on the tech giants’ social media platforms, even though those companies have billions of dollars and thousands of content moderators.

“Our teams are picking up the slack for well-resourced entities that could be doing this type of work,” she said.

Even though videos on YouTube Shorts last no more than a minute, they are much more difficult to review than longer videos, according to two people familiar with the matter.

The company uses artificial intelligence to review uploaded content. Some of its AI systems detect signs of problematic content within minutes while others take hours, one of the people said. YouTube has begun working on a system that works more effectively with short videos.

YouTube has also struggled to rein in misinformation in Spanish, according to research and analysis by Media Matters and Equis, a nonprofit organization focused on the Latino community.

Nearly half of Hispanics turn to YouTube for news, more than to any other social network, said Jacobo Licona, a researcher at Equis. Those viewers have access to a wealth of disinformation and one-sided political propaganda on the platform, he said, with Latin American influencers in countries such as Mexico, Colombia and Venezuela wading into US politics.

Many of them have picked up familiar narratives, such as false claims that dead people voted in the United States, and translated them into Spanish.

In October, YouTube asked a group that monitors Spanish-language misinformation on the site for access to its monitoring data, according to two people familiar with the request. They said that the company was seeking outside help to police its platform and that the group was concerned YouTube hadn’t made the necessary investments in Spanish content.

YouTube said that it had reached out to subject matter experts for more information before the midterms. It also said that it has made substantial investments in combating harmful misinformation across all languages, including Spanish.

YouTube has several laborious processes for moderating videos in Spanish. The company employs Spanish-speaking moderators who help train AI systems and screen content. One employee said one of the AI methods involved transcribing videos and reviewing the text. Another used Google Translate to convert the text from Spanish into English. The person said that these methods were not always accurate because of colloquial and idiomatic expressions.

YouTube said that its systems also evaluated visual cues and metadata in Spanish-language videos, and that its AI was capable of learning new trends such as evolving idioms and slang.

In English, researchers found allegations of electoral fraud from well-known figures, including Charlie Kirk; Dinesh D’Souza, who created “2000 Mules”; and Tim Pool, a YouTube personality with 1.3 million followers who is known for casting doubt on the results of the 2020 election and questioning the use of ballot drop boxes.

“One of the things that bothers me most is that people watching polls are being praised and encouraged on YouTube,” Kayla Gogarty, deputy director of research at Media Matters, said in an interview. “This is a clear example of something moving online into the real world and potentially causing harm.”
