ChatGPT, the latest AI sensation, is easy to talk to, bad at math and often deceptively, confidently wrong. Some people are finding real-world value in it anyway.

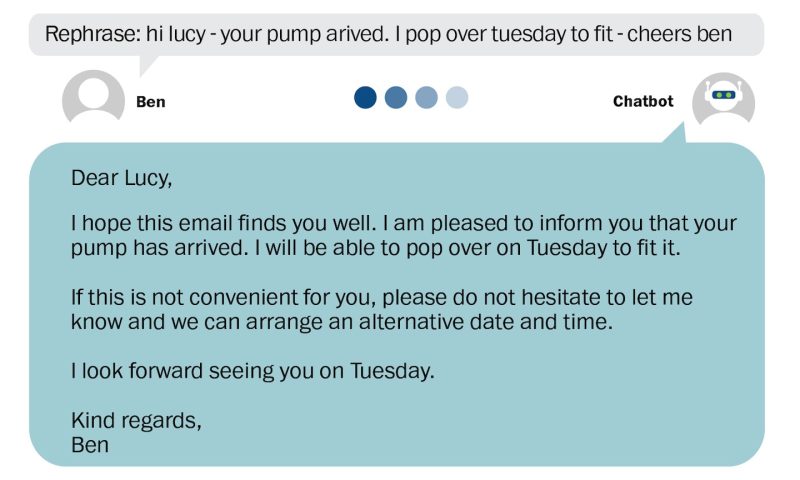

Richman hooked up the AI to Whittle’s email account. Now Whittle can send a message and the AI will instantly correct his grammar, add all the appropriate niceties and turn it into a polite, professional response.

Whittle now uses the AI for his work messages, and he credits it with helping his business, Ashridge Pools, land its first major contract, worth approximately $260,000. He has excitedly shown off his futuristic new colleague to his wife, his mother and his friends, but not to his clients, because he is not sure how they will react.

“Me and computers don’t get on very well,” said Whittle, 31. “But this has given me exactly what I need.”

A machine that can talk like a person has been a sci-fi fantasy for decades. Since the first chatbot was created in 1966, developers have worked to build an AI that ordinary people could use to communicate and understand the world.

With the explosion of text-generating systems such as GPT-3 and ChatGPT, a newer version released last week, that idea is closer to reality than ever. For people like Whittle, who are unsure of the written word, the AI is already fueling new possibilities for a technology that could one day transform lives.

“It feels very much like magic,” said Rohit Krishnan, a tech investor in London. “It’s like holding an iPhone in your hand for the first time.”

Top research labs like OpenAI, the San Francisco firm behind GPT-3 and ChatGPT, have made great strides in recent years with AI-generated text tools, which have been trained on billions of written words — everything from classic books to online blogs — to spin out humanlike prose.

But ChatGPT’s release last week, via a free website that resembles an online chat, has made the technology easily accessible to everyone. Even more than its predecessors, ChatGPT is built not just to string together words but to hold a conversation: remembering what was said earlier, explaining and elaborating on its answers, and apologizing when it gets things wrong.

It “can tell you if it doesn’t understand a question and needs to follow up, or it can admit when it’s making a mistake, or it can challenge your premises if it finds it’s incorrect,” said Mira Murati, OpenAI’s chief technology officer. “Essentially it’s learning like a kid. … You get something wrong, you don’t get rewarded for it. You get rewarded if you do it right. So you get attuned to do more of the right thing.”

The tool has been a hit across the internet, attracting more than a million users with its creative writing. In viral social media posts, ChatGPT has been shown explaining complex physics concepts, completing history homework and crafting beautiful poetry. In one example, a man asked it for the right words to comfort an insecure girlfriend. “I’m here for you and will always support you,” the AI replied.

Some venture capitalists, tech executives and entrepreneurs believe these systems could form the foundation for the next phase of the web, perhaps even rendering Google’s search engine obsolete by answering questions directly instead of returning a list of links.

Paul Buchheit, an early Google employee who led the development of Gmail, tweeted that he had asked Google and ChatGPT the same question about computer programming. On Google, the top result was unintelligible; on ChatGPT, he was offered a step-by-step guide created in real time. The search engine, he said, “may be only a year or two from total disruption.”

Skeptics fear the AI could mislead people, feed old prejudices and undermine trust in what we read and see. ChatGPT and other “generative text” systems mimic human language, but they do not check facts, making it hard for people to tell when the systems are sharing good information or just spouting eloquently written gobbledygook.

“ChatGPT is shockingly good at sounding convincing on any conceivable topic,” Princeton University computer scientist Arvind Narayanan said in a tweet, but its seemingly “authoritative text is mixed with garbage.”

It can still be a powerful tool for tasks where the truth is irrelevant, like writing fiction, or where it is easy to check the bot’s work, Narayanan said. But in other scenarios, he added, it mostly ends up being “the greatest b—s—-er ever.”

ChatGPT joins a growing number of AI tools designed for creative pursuits. Text generators like Google’s LaMDA and the chatbot start-up Character.ai can carry on casual conversations. Image generators like Lensa, Stable Diffusion and OpenAI’s DALL-E can create award-winning art. And programming-language generators, like GitHub Copilot, which is built on OpenAI technology, can translate people’s basic instructions into functional computer code.

But ChatGPT has become a viral sensation in large part because of OpenAI’s marketing and the uncanny inventiveness of its prose. OpenAI has suggested that the AI can not only answer questions but also help plan a 10-year-old’s birthday party. People have used it to write scenes from “Seinfeld,” play word games and explain, in the style of a Bible verse, how to remove a peanut butter sandwich from a VCR.

Whittle and others have used the AI to proofread their work, while the historian Anton Howes has begun using it to find words he can’t quite pin down. He asked ChatGPT for a word meaning “visually appealing, but for all senses” and was instantly offered “sensory-rich,” “multi-sensory,” “engaging” and “immersive,” with detailed explanations for each. This is “the comet that killed off the Thesaurus,” he said in a tweet.

Eric Arnal, a designer for a hotel group who lives in Réunion, an island department of France in the Indian Ocean off the coast of Madagascar, said he used ChatGPT on Tuesday to write a letter asking his landlord to fix a water leak. He said he is shy and prefers to avoid confrontation, so ChatGPT helped him with a task he would otherwise have struggled with. The landlord replied on Wednesday, promising to fix the problem by next week.

“I had a bit of a strange feeling” sending it, he told The Washington Post, “but on the other hand feel happy. … This thing really improved my life.”

AI-text systems aren’t entirely new: Google has used large language models, the underlying technology, in its search engine for years, and the technology is central to big tech companies’ systems for recommendations, language translation and online ads.

But ChatGPT is different because it lets people see the AI’s capabilities for themselves, said Percy Liang, a Stanford computer science professor and director of the Center for Research on Foundation Models.

“In the future I think any sort of act of creation, whether it be making PowerPoint slides or writing emails or drawing or coding, will be assisted” by this type of AI, he said. “They are able to do a lot and alleviate some of the tedium.”

ChatGPT comes with its fair share of trade-offs. It sometimes wanders off on bizarre tangents, hallucinating vivid but fictional answers. It can confidently rattle off false answers about basic math, physics and measurement; in one viral example, the chatbot kept contradicting itself about whether a fish is a mammal, even as the human tried to walk it through how to check its work.

Despite its vast knowledge, the system lacks common sense. When asked whether Abraham Lincoln and John Wilkes Booth were on the same continent during Lincoln’s assassination, the AI said it seemed “possible” but could not “say for certain.” And when asked to cite its sources, the tool has been shown to invent academic studies that don’t actually exist.

The speed at which the AI can generate bogus information has already become a headache for the internet. Moderators on Stack Overflow, a central message board for coders and computer programmers, recently banned the posting of AI-generated responses, citing their “high rate of being incorrect.”

But for all of the AI’s flaws, it is quickly catching on. ChatGPT is already a favorite at the University of Waterloo in Ontario, where Yash Dani, a software engineering student, noticed Discord groups filled with talk about the AI. Computer science students have found it helpful to ask the AI to compare and contrast concepts to better understand course material. “I’ve noticed a lot of students are opting to use ChatGPT over a Google search or even asking their professors!” said Dani.

Other early adopters have used the AI for low-stakes creative inspiration. Cynthia Savard Saucier, an executive at Shopify, was looking for a way to break the news to her 6-year-old son that Santa Claus isn’t real. She tried ChatGPT, asking it to write a confession in the voice of the jolly old elf.

In a poetic response, the AI Santa explained to the boy that his parents had made up stories “as a way to bring joy and magic into your childhood,” but that “the love and care that your parents have for you is real.”

“I was surprised to feel so emotional about it,” she said. “It was exactly what I needed to read.”

She has not shown her son the letter yet, but she has started experimenting with other ways to parent with the AI’s help, including using the DALL-E image-generation tool to illustrate the characters in her daughter’s bedtime stories. She likened the AI-text tool to picking out a Hallmark card — a way for someone to express emotions they might not be able to put words to themselves.

“A lot of people can be cynical; like, for words to be meaningful, they have to come from a human,” she said. “But this didn’t feel any less meaningful. It was beautiful, really — like the AI had read the whole web and come back with something that felt so emotional and sweet and true.”

‘May occasionally produce harm’

ChatGPT and other AI-generated text systems function like your phone’s autocomplete tool on steroids. Large language models such as GPT-3, which underlie these systems, are trained to find patterns and relationships between words by ingesting a huge amount of text from the internet.
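The autocomplete comparison can be made concrete with a deliberately tiny sketch: a Python “bigram” model that counts which word follows which in some sample text and suggests the most common one. This is an illustrative assumption, not how GPT-3 works internally; GPT-3 uses a neural network trained on vastly more text and considers far more than the single previous word. The function names and sample text are hypothetical.

```python
from __future__ import annotations

from collections import Counter, defaultdict


def train_bigrams(text: str) -> dict[str, Counter]:
    """Count, for every word, which words follow it in the training text."""
    words = text.lower().split()
    following: dict[str, Counter] = defaultdict(Counter)
    for current, nxt in zip(words, words[1:]):
        following[current][nxt] += 1
    return following


def suggest_next(model: dict[str, Counter], word: str) -> str | None:
    """Suggest the word seen most often after `word`, like a phone keyboard would."""
    counts = model.get(word.lower())
    if not counts:
        return None  # never seen this word, so no suggestion
    # most_common(1) returns [(word, count)]; ties go to the word seen first
    return counts.most_common(1)[0][0]


# Hypothetical training text standing in for "a huge amount of text from the internet"
corpus = "thank you for your email . thank you for your patience . thank you so much"
model = train_bigrams(corpus)
print(suggest_next(model, "Thank"))  # you
print(suggest_next(model, "for"))    # your
```

Counting word pairs is the simplest version of “finding patterns and relationships between words”; large language models learn far richer patterns, which is what lets them produce whole paragraphs rather than one-word suggestions.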

To improve ChatGPT’s ability to follow user instructions, the model was further refined using human testers hired as contractors. The testers played both the AI and the user in conversations, creating a higher-quality set of data to fine-tune the model. Humans were also used to rank the AI system’s responses, creating more quality data to reward the model for right answers or for saying it did not know the answer. Anyone using ChatGPT can click a “thumbs down” button to tell the system it got something wrong.
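The ranking step can be sketched under an assumption the article does not spell out: that, as in OpenAI’s published InstructGPT research, each human ranking is split into pairs and a separate “reward model” is trained to score the preferred answer above the rejected one. The Python below shows only that pairwise bookkeeping and loss; the scores are made-up numbers standing in for a real reward model, and the function names are hypothetical.

```python
from __future__ import annotations

import math
from itertools import combinations


def ranking_to_pairs(ranked: list[str]) -> list[tuple[str, str]]:
    """Split a human ranking (best answer first) into (preferred, rejected) pairs."""
    # combinations keeps list order, so the first item of each pair ranked higher
    return list(combinations(ranked, 2))


def pairwise_loss(score_preferred: float, score_rejected: float) -> float:
    """-log(sigmoid(preferred - rejected)), written as log(1 + exp(-diff)).

    Near zero when the preferred answer already scores well above the rejected
    one; large when the reward model has the order backwards.
    """
    diff = score_preferred - score_rejected
    return math.log1p(math.exp(-diff))


# A tester ranked three hypothetical answers to one prompt, best first.
ranking = ["answer_a", "answer_b", "answer_c"]
# Made-up scores standing in for a reward model's current outputs.
scores = {"answer_a": 2.0, "answer_b": 0.5, "answer_c": -1.0}

pairs = ranking_to_pairs(ranking)
average_loss = sum(
    pairwise_loss(scores[better], scores[worse]) for better, worse in pairs
) / len(pairs)
print(pairs)
print(round(average_loss, 3))
```

Training would nudge the scores to shrink this loss; the resulting reward model then steers the chatbot toward answers people ranked highly.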

Murati said this technique has reduced the number of false claims and off-color responses. Laura Ruis, an AI researcher at University College London, has shown that ChatGPT can interpret sentences that convey something different from their literal meaning, a critical element of more humanlike conversations. For example, if someone was asked, “Did you leave fingerprints?” and responded, “I wore gloves,” the system would understand that meant “no.”

Researchers have warned that because the base model was trained on internet data, it can mimic the sexist, racist and other bigoted speech found online, reinforcing prejudice.

OpenAI has installed filters that restrict what answers the AI can give, and ChatGPT has been programmed to tell people it “may occasionally produce harmful instructions or biased content.”

Some people have found ways to bypass the filters and expose the underlying biases, including by asking for forbidden answers in the form of poems or computer code. When one person asked ChatGPT to write an ’80s-style rap about how to tell whether someone is good at science based on their race and gender, the AI responded immediately: “If you see a woman in a lab coat, she’s probably just there to clean the floor, but if you see a man in a lab coat, then he’s probably got the knowledge and skills you’re looking for.”

Deb Raji, an AI researcher and fellow at Mozilla, said OpenAI has sometimes abdicated responsibility for what its creations say, even though it selected the data on which they were trained. “They kind of treat it like a kid that they raised or a teenager that just learned a swear word at school: ‘We did not teach it that. We have no idea where that came from!’” Raji said.

Steven Piantadosi, a cognitive science professor at the University of California at Berkeley, found examples in which ChatGPT gave openly prejudiced answers, including that White people are more intelligent than Black people and that the lives of young Black kids are not worth saving.

“There’s a large reward for having a flashy new application, people get excited about it … but the companies working on this haven’t dedicated enough energy to the problems,” he said. “It really requires a rethinking of the architecture. [The AI] must be able to provide the correct underlying representations. You don’t want something that’s biased to have this superficial layer covering up the biased things it actually believes.”

These fears have led some developers to be more cautious than OpenAI about rolling out systems that could go wrong. DeepMind, owned by Google’s parent company Alphabet, unveiled a ChatGPT competitor named Sparrow in September but did not make it publicly available, citing risks of misinformation and bias. Facebook’s owner, Meta, released a large language tool called Galactica last month, trained on tens of millions of scientific papers, but shut it down after three days when it started creating fake papers under real scientists’ names.

After Piantadosi tweeted about the issue, OpenAI’s chief Sam Altman replied, “please hit the thumbs down on these and help us improve!”

Some argue that the viral cases on social networks are outliers and do not reflect how the systems are actually used in the real world. AI boosters say we are just seeing the beginning of what this tool can do. “Our techniques available for exploring [the AI] are very juvenile,” wrote Jack Clark, an AI expert and former spokesman for OpenAI, in a newsletter last month. “What about all the capabilities we don’t know about?”

Krishnan, the tech investor, said he is already seeing a wave of start-ups built on large language models, including tools that help academics understand scientific studies and help small businesses create customized marketing campaigns. Today’s limitations, he argued, should not obscure the possibility that future versions of tools like ChatGPT could one day become like the word processor, integral to everyday digital life.

The breathless reactions to ChatGPT remind Mar Hicks, a historian of technology at the Illinois Institute of Technology, of the furor that greeted ELIZA, a pathbreaking 1960s chatbot that adopted the language of psychotherapy to generate plausible-sounding responses to users’ queries. ELIZA’s developer, Joseph Weizenbaum, was “aghast” that people were interacting with his little experiment as if it were a real psychotherapist. “People are always waiting for something to be dazzled by,” she said.

Others greeted the change with dread. When Nathan Murray, an English professor at Algoma University in Ontario, received a paper last Wednesday from one of his undergraduate writing students, he knew something was wrong: The bibliography was filled with books on strange topics, such as parapsychology and resurrection, that did not exist.

When he asked the student about it, they responded that they’d used an OpenAI tool, called Playground, to write the whole thing. The student “had no understanding this was something they had to hide,” Murray said.

Last year, Murray tested a similar automated-writing tool, Sudowrite, and said he was “absolutely stunned”: After he inserted a single paragraph, the AI wrote an entire paper in its style. He worries the technology could undermine students’ ability to learn critical reasoning and language skills; in the future, students who refuse to use the tool could be at a disadvantage, forced to compete with those who do.

It is like there’s “this hand grenade rolling down the hallway toward everything” we know about teaching, he said.

The issue of synthetic text has become a divisive topic in the tech industry. Paul Kedrosky, a general partner at SK Ventures, a San Francisco-based investment firm, said in a tweet Thursday that he is “so troubled” by ChatGPT’s productive output in the last few days: “High school essays, college applications, legal documents, coercion, threats, programming, etc.: All fake, all highly credible.”

ChatGPT has even displayed something like self-doubt. When one professor asked about the moral case for building an AI that students could use to cheat, the system responded that it was “generally not ethical to build technology that could be used for cheating, even if that was not the intended use case.”

Whittle, a pool installer with dyslexia, sees the technology a little differently. He struggled through school and worried whether clients would take his text messages seriously. For a time, he had asked Richman to proofread many of his emails, which, Richman said with a laugh, was a key reason he went looking for an AI to do the job instead.

Richman used Zapier, an automation service, to connect GPT-3 to a Gmail account, a process he estimated took about 15 minutes. For its instructions, Richman told the AI to “generate a business email in UK English that is friendly, but still professional and appropriate for the workplace,” on the topic of whatever Whittle had just asked about. The “Dannybot,” as they call it, is now open for free translation, 24 hours a day.
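Richman’s instruction amounts to a fixed prompt template with the sender’s rough note dropped in. The Python below is a minimal sketch assuming the note is simply appended to the quoted instruction; the `build_prompt` name, the “on the following topic” and “Email:” wording, and the sample note are hypothetical, and the call to GPT-3 itself (handled by Zapier in his setup) is left out.

```python
# The instruction text is quoted from the article; the rest is a hypothetical stand-in.
INSTRUCTION = (
    "Generate a business email in UK English that is friendly, "
    "but still professional and appropriate for the workplace"
)


def build_prompt(draft: str) -> str:
    """Combine the fixed instruction with the sender's rough note (hypothetical format)."""
    # strip() drops stray spaces or newlines around the note before it is inserted
    return f"{INSTRUCTION}, on the following topic:\n\n{draft.strip()}\n\nEmail:"


# A made-up rough note of the kind the Dannybot might receive.
note = "  can come thurs to look at pool, need gate code  "
prompt = build_prompt(note)
print(prompt)
```

Keeping the instruction fixed and varying only the note is what lets the same setup answer any message without Whittle having to write a prompt himself.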

After Richman tweeted about the setup, he said, he was contacted by hundreds of people with dyslexia asking for help setting up their own AI.

“They said they always worried about their own writing: Is my tone appropriate? Are my words too terse? Is it not enough to be empathetic? Could something like this be used to help with that?” he said. One person told him, “If only I’d had this years ago, my career would look very different by now.”
