As a rather commercially successful author once wrote, “The night is dark and full of terrors, the day bright and beautiful and full of hope.” It is fitting imagery for artificial intelligence, which like all technologies has its upsides and downsides.

Art-generating models like Stable Diffusion, for example, have led to extraordinary outpourings of creativity, powering apps and even entirely new business models. On the other hand, its open source nature lets bad actors use it to create deepfakes at scale, all while artists protest that it is profiting from their work.

What’s next for AI in 2023? Will regulation rein in the worst of what AI brings, or are the floodgates wide open? Will powerful, transformative new forms of AI emerge, in the vein of ChatGPT, and disrupt industries once thought safe from automation?

Expect more (and more problematic) art-generating AI apps

With the success of Lensa, the AI-powered selfie app from Prisma Labs that went viral, expect many more selfie apps in the same vein. Expect them, too, to be capable of being tricked into creating NSFW images, and to disproportionately sexualize and alter the appearance of women.

Maximilian Gahntz, a senior policy researcher at the Mozilla Foundation, said he expects that integrating generative AI into consumer technology will amplify the effects of such systems, both positive and negative.

Stable Diffusion, for example, was fed billions of images from the internet until it “learned” to associate certain words and concepts with certain images. Text-generating models have routinely been tricked into producing misleading or offensive content.

Mike Cook, a member of the Knives and Paintbrushes open research group, agrees with Gahntz that generative AI will continue to prove a major, and problematic, force for change. But he thinks 2023 has to be the year when generative AI “finally puts its money where its mouth is.”

Image: Prompt by TechCrunch, model by Stability AI, generated in the free tool Dream Studio.

“It’s not enough to motivate a group of professionals [to create new tech]: for technology to become a long-term part of our lives, it has to either make someone a lot of money, or have a tangible impact on the daily lives of the general public,” Cook said, predicting a push to achieve one of those two things “with mixed success.”

Artists lead the effort to opt out of data sets

DeviantArt released a Stable Diffusion-based AI art generator that uses artwork from the DeviantArt community. Longtime DeviantArt users voiced loud disapproval of the art generator, criticizing the platform for its lack of transparency about how their uploaded art was used to train the system.

The creators of the most popular systems, OpenAI and Stability AI, say they have taken steps to limit the amount of harmful content their systems produce. But judging by many of the generations shared on social media, there is clearly still much work to do.

“Data sets require active curation to address these issues and should be subject to significant scrutiny, including from communities that tend to get the short end of the stick,” Gahntz said, comparing the process to the ongoing controversies over content moderation in social media.

Stability AI, which is largely funding the development of Stable Diffusion, recently bowed to public pressure, signaling that it would allow artists to opt out of the data set used to train the next generation of Stable Diffusion models. Through the website HaveIBeenTrained.com, rights holders will be able to request an opt-out before training begins in a few days.

OpenAI offers no such opt-out mechanism, preferring instead to partner with organizations such as Shutterstock to license portions of their image galleries. But given the legal and publicity headwinds it faces alongside Stability AI, it is likely only a matter of time before it follows suit.

The courts may eventually force its hand. In the United States, Microsoft, GitHub and OpenAI are being sued in a lawsuit that accuses them of violating copyright law by letting Copilot, GitHub’s service that intelligently suggests lines of code, reproduce sections of licensed code without providing credit.

GitHub has added settings to prevent public code from appearing in Copilot’s suggestions, and it plans to introduce a feature that will indicate the source of code suggestions. These are imperfect measures, however. In at least one instance, the filter setting caused Copilot to emit large chunks of copyrighted code, including the attribution text.

Expect criticism to intensify in the coming year, especially as the UK considers rules that would remove the requirement that systems trained on public data be used strictly non-commercially.

Open-source and decentralized efforts will continue growing

A few AI companies dominated the stage in 2022, particularly OpenAI and Stability AI. But the pendulum might swing back towards open source in 2023, as the ability to build new systems moves beyond “powerful and resource-rich AI labs,” as Gahntz put it.

He suggested that a community approach could allow for more scrutiny of systems as they are built and deployed. “If the models are open and if the datasets are open, that will enable much more of the important research that has pointed out a lot of the flaws and harms associated with generative AI, and that is often very difficult to do.”

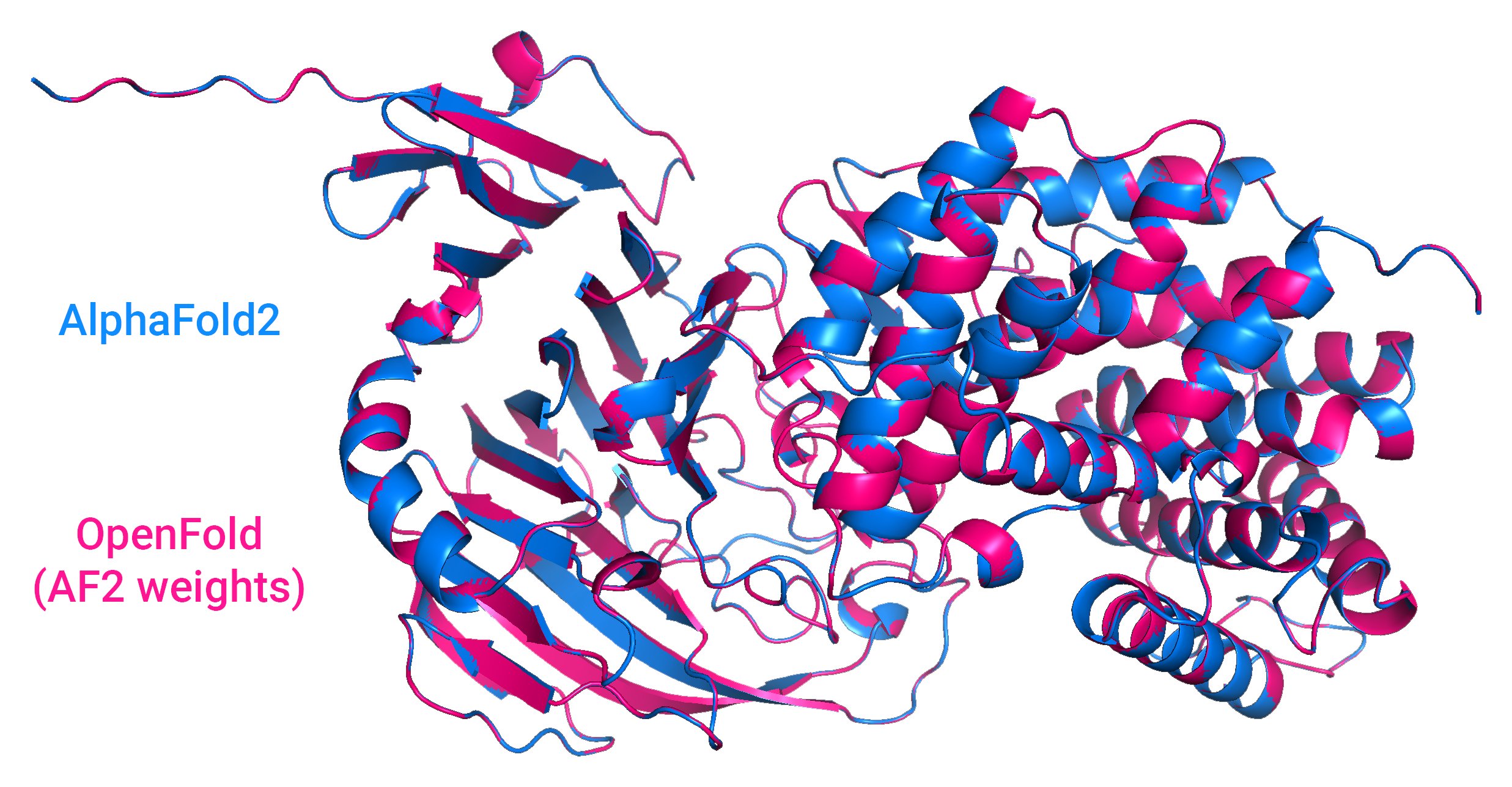

Image Credits: Results from OpenFold, an open source AI system that predicts the shapes of proteins, compared to DeepMind’s AlphaFold2.

Examples of such community-focused initiatives include large language models from EleutherAI and BigScience, an effort supported by AI startup Hugging Face. Stability AI is itself funding a number of communities, such as the music-generation-focused Harmonai and OpenBioML, a loose collection of biotechnology experiments.

While funding and expertise are still necessary to train and run cutting edge AI models, decentralized computing may pose a challenge to traditional data centers as open source efforts mature.

BigScience took a step toward enabling decentralized development by releasing the open source Petals project. Petals lets people contribute their computing power, similar to Folding@home, to run large AI language models that would normally require a high-end GPU or a server.

“Modern generative models are computationally expensive to train and run. Some preliminary estimates put ChatGPT’s daily expenditures at around $3 million,” Chandra Bhagavatula, a senior research scientist at the Allen Institute for Artificial Intelligence, said via email. Addressing this cost will be important for making models like ChatGPT commercially viable and accessible to more people.

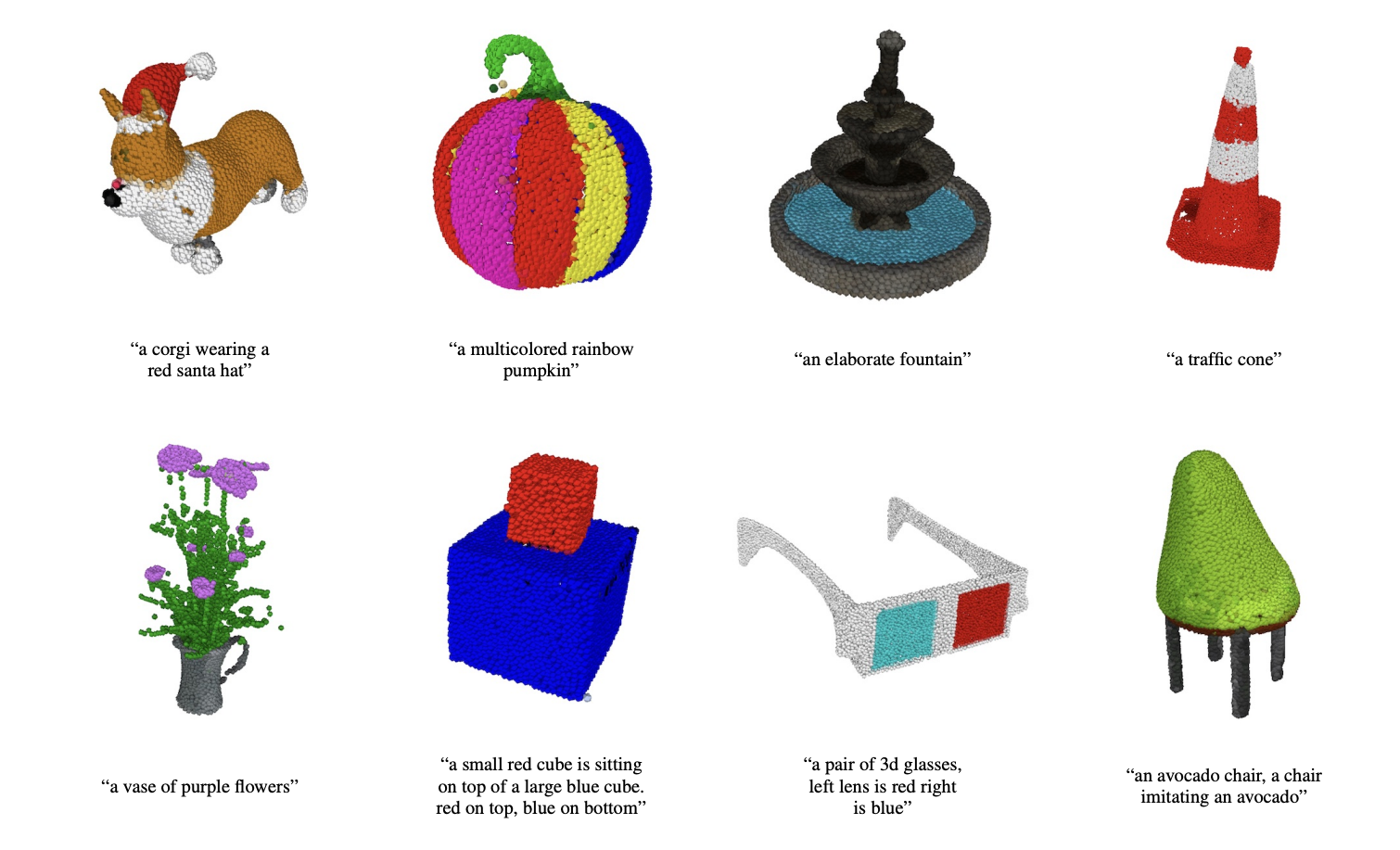

Chandra points out, however, that the big labs will still have competitive advantages as long as their methods and data remain proprietary. In a recent example, OpenAI released Point-E, a model capable of creating 3D objects from a text prompt. OpenAI has made the model available for free, but it has not released or disclosed the Point-E training data.

Point-E generates point clouds.

“I think the open source and decentralization efforts are totally worth it and will benefit more researchers, practitioners and users,” said Chandra. “However, despite being open source, the best models are still inaccessible to a large number of researchers and practitioners because of their resource limitations.”

AI companies brace for upcoming regulations

Regulations like the European Union’s Artificial Intelligence Act could change how companies develop and deploy AI systems in the future. So could more local efforts such as New York City’s AI hiring statute, which requires that AI and algorithm-based technology for recruiting, hiring or promotion be audited for bias before it can be used.

Chandra considers these regulations necessary, especially in light of generative AI’s increasingly apparent technical flaws, such as its tendency to present false information as fact.

“This makes generative AI difficult to apply in many areas where errors can have enormous costs, such as healthcare. Additionally, the ease of generating incorrect information creates problems around misinformation and disinformation,” Chandra said. “[And yet] AI systems are already making decisions with moral and ethical implications.”

Next year will only bring the threat of regulation, though. Expect more wrangling over rules and court cases before anyone is fined or charged. But companies may still jockey for position in the most advantageous categories of upcoming laws, such as the AI Act’s risk categories.

As currently written, the rule divides AI systems into four risk categories, each with its own requirements and level of scrutiny. Systems in a higher risk category, “high-risk” AI (such as credit scoring algorithms and robotic surgery applications), must meet certain legal, ethical and technical standards before they are allowed to enter the European market. The lowest risk category, “minimal or no risk” AI (such as spam filters and AI-enabled video games), carries only transparency obligations, such as making users aware that they are interacting with an AI system.

Os Keyes, a Ph.D. candidate at the University of Washington, expressed concern that companies would aim for the lowest risk level in order to minimize their own responsibilities and visibility to regulators.

“That concern aside, [the AI Act is] really the most positive thing I see on the table,” they said. “I haven’t seen much of anything out of Congress.”

However, investments are not guaranteed

Gahntz argues that even if an AI system works well enough for most people but is harmful to some, there is still much work to do before a company should make it widely available. “There’s also a business case for all of this. If your model generates a lot of bad stuff, consumers won’t like it,” he added. “But obviously, this is also about justice.”

It is unclear whether companies will be persuaded by this argument in the next year, particularly since investors seem keen to put their money into AI-related projects.

In the midst of the Stable Diffusion controversies, Stability AI raised funding at a valuation of more than $1 billion from notable backers including Coatue and Lightspeed Venture Partners. OpenAI is said to be valued at $20 billion as it enters advanced talks to raise more funding from Microsoft. (Microsoft previously invested $1 billion in OpenAI in 2019.)

Of course, these could be exceptions to the rule.

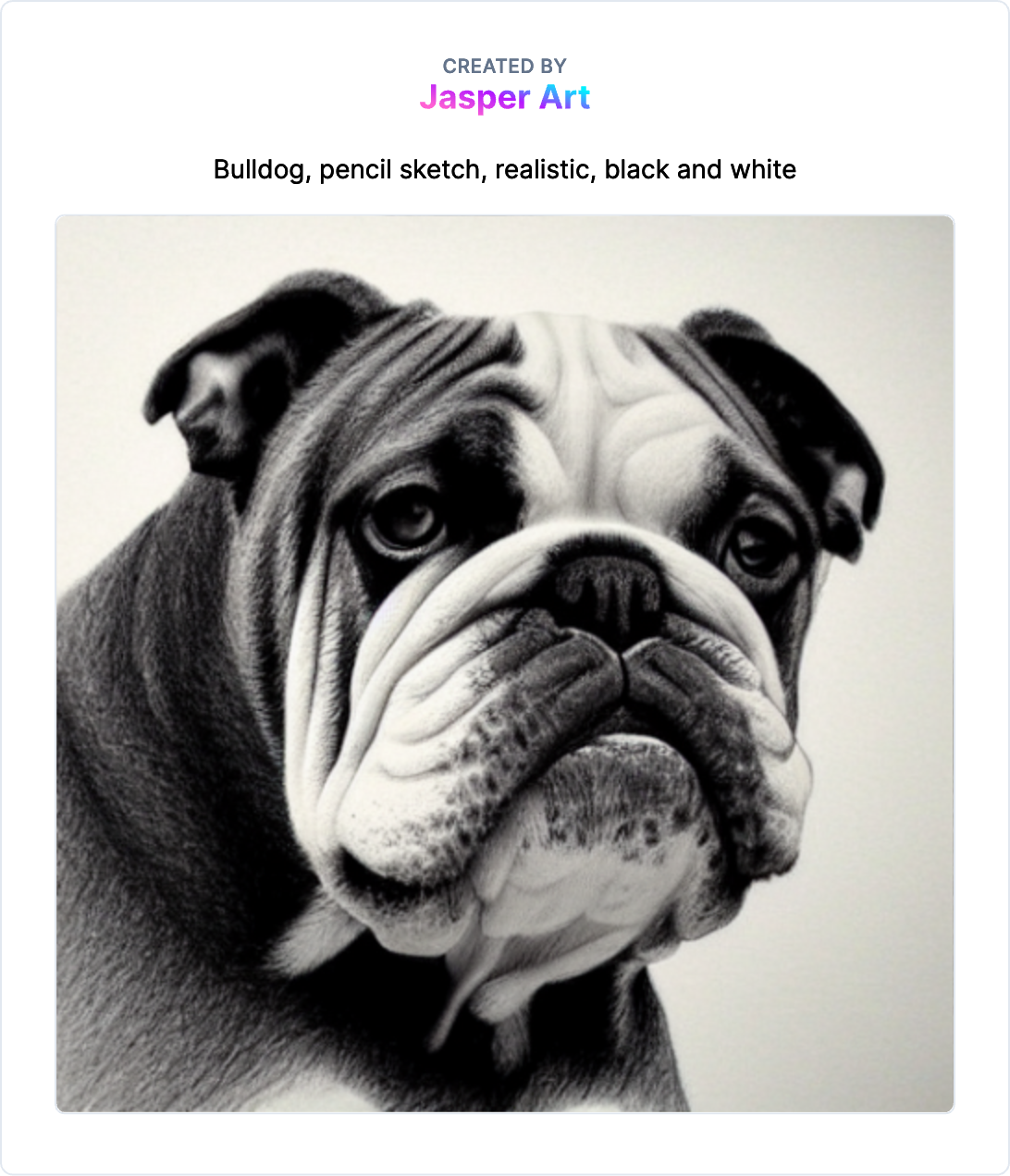

Image Credits: Jasper

According to Crunchbase, apart from self-driving companies Cruise, Wayve and WeRide, the top-performing AI companies in terms of money raised were software-based. Contentsquare, which sells a service that uses artificial intelligence to make recommendations for web content, closed a $600 million round. Uniphore, which sells conversational analytics software (think call center metrics) as well as conversational assistants, landed $400 million in February. Meanwhile, Highspot, whose AI-powered platform provides real-time, data-driven recommendations to marketers and salespeople, raised $248 million in January.

Investors may well chase safer bets, such as automating the analysis of customer complaints or generating sales leads, even if these are not as glamorous as generative AI. That does not mean there will be no large investments, but they will likely be reserved for high-profile players.
